Everyone knows what virtual reality is. At the very least, people know it’s something that involves wearing a headset and experiencing something other than reality. “Mixed Reality” is a much less familiar term, and before diving deeper into it, I’ll provide a quick description of what I mean by it (the definitions tend to vary).

My first introduction to mixed reality was through this very cool trailer, made by Framestore CFC for Tilt Brush, a VR-based 3D drawing application from Google.

At first, I thought it was merely a clever use of visual effects to depict the VR user experience, meaning a team of visual effects artists had taken a pre-recorded video of the user and added computer graphics that represented (maybe very closely) the content the user saw in their headset. And I happened to be right in that case. But right after, I saw this “Fantastic Contraption Mixed Reality Gameplay Footage” video by Kert Gartner. Kert is one of the early pioneers of mixed reality (and was kind enough to help me in my early research), and his videos clearly show graphics taken directly from the virtual reality application, combined with video footage of the user.

What I found most exciting in Kert’s videos was how freely the camera moved around the user, and how “planted” the user looked in the CG environment. That effect depends on what visual effects artists call “camera tracking,” which is traditionally done either with expensive infrared sensors mounted on trusses around a movie soundstage, or later on the computer using high-end visual effects software. Even so, because a seamless camera match can be so laborious to achieve, camera tracking is often avoided in low-budget productions.

The cool thing about moving the camera around a virtual reality user, I realized, is that the same head tracking technology that is crucial for a room-scale VR experience (the small infrared sensors dotting the HTC Vive headset and controllers) can be used to accurately track a camera as well. In layman’s terms, camera tracking is a byproduct of any room-scale VR setup. Moreover, it works in real time, with extremely low latency.

Kert actually documented his process in a few cool behind-the-scenes videos that greatly inspired me.

Around that same time, a producer came to me asking if I could create something similar to the Tilt Brush trailer (using the Tilt Brush app), and that’s when I decided to roll up my sleeves and give this process a shot myself.

I had very recently bought my first VR kit, HTC’s Vive, which includes a headset, two “Vive controllers” (the VR equivalent of a joystick) and two “base stations” (infrared beacons used to pinpoint the positions of the headset and controllers in the room).

In order to track my physical camera using the Vive system, I would have to attach one of my controllers to the camera and have the computer move a “virtual camera” based on that controller’s position and orientation (aka its “transformation”). Luckily, just a few days earlier, a third Vive controller had arrived in the mail (I ordered it after incapacitating one of my original controllers by thrusting it into my living room wall while playing VR). In the meantime, I had managed to fix my broken controller, so I now had three working controllers. Coincidence?

It sounded simple in theory, but there were a few immediate obstacles:

To overcome the first obstacle, I used Tribal Instincts’ brilliant “configurator” tool. Tribal Instincts is a Seattle-based software developer who runs a popular YouTube channel where he reviews VR apps and discusses technology. He created a handy Unity3D-based application that streamlines the process of correcting the offset between the camera and the controller. You still have to eyeball it to a certain extent, but it saves hours of blind trial and error. It also includes useful instructions on how to feed that offset information into the VR program so that it uses it properly.
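The offset the configurator solves for is essentially a fixed rigid transform between the controller and the camera lens. Once it is known, the virtual camera’s pose on every frame is simply the controller’s tracked pose composed with that offset. The Python sketch below illustrates that composition under a few assumptions of mine: rotations are unit quaternions in (w, x, y, z) order, positions are in metres, and the names `Pose` and `camera_pose_from_controller` are hypothetical. It is not the configurator’s actual code.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a*b (rotation b applied first, then a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q, computed as q * v * conj(q)."""
    w, x, y, z = q
    conj = (w, -x, -y, -z)
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), conj)
    return (rx, ry, rz)


@dataclass
class Pose:
    position: Vec3
    rotation: Quat

    def compose(self, local: "Pose") -> "Pose":
        """Convert a pose expressed in this pose's local frame into world space."""
        offset = rotate(self.rotation, local.position)
        pos = (self.position[0] + offset[0],
               self.position[1] + offset[1],
               self.position[2] + offset[2])
        return Pose(pos, quat_mul(self.rotation, local.rotation))


def camera_pose_from_controller(controller: Pose, offset: Pose) -> Pose:
    # Hypothetical helper: `offset` is where the lens sits relative to the
    # controller, i.e. the value a calibration tool would estimate once.
    return controller.compose(offset)
```

For example, with the controller yawed 90° about the vertical axis and the lens 10 cm along the controller’s local X axis, the virtual camera lands 10 cm along world −Z from the controller. Keeping the offset as its own pose means it only has to be calibrated once per rig, while the controller pose is refreshed every frame.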

Connecting the third Vive controller wirelessly required buying a Steam Controller wireless receiver and following some steps described in this excellent article written by Kert.

Once the VR software detected the third controller, I was pleasantly surprised to discover that it automatically switched to a hidden “Mixed Reality” mode, splitting the screen into four quadrants, each containing a video layer that compositing software can use to bake together a mixed reality video.
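As a rough illustration of what the compositing software does with those four quadrants, here is a minimal Python sketch. It assumes the layout commonly produced by SteamVR’s mixed reality mode (foreground top-left, foreground alpha top-right, background bottom-left, first-person view bottom-right), which is worth verifying for your own setup, and it assumes the live camera image has already been keyed and scaled to the size of one quadrant. Images are plain nested lists of RGB tuples to keep it dependency-free; the function names are hypothetical, and a real pipeline would do this on the GPU or in a tool like OBS.

```python
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]  # channels in 0.0-1.0
Image = List[List[RGB]]           # rows of pixels


def split_quadrants(frame: Image) -> Dict[str, Image]:
    """Cut a 2x2 quad frame into named layers (layout is an assumption)."""
    h, w = len(frame), len(frame[0])
    hh, hw = h // 2, w // 2

    def crop(r0: int, c0: int) -> Image:
        return [row[c0:c0 + hw] for row in frame[r0:r0 + hh]]

    return {
        "foreground": crop(0, 0),
        "foreground_alpha": crop(0, hw),
        "background": crop(hh, 0),
        "first_person": crop(hh, hw),
    }


def over(top: RGB, alpha: float, bottom: RGB) -> RGB:
    """Standard 'over' blend of one pixel onto another."""
    return tuple(alpha * t + (1.0 - alpha) * b for t, b in zip(top, bottom))


def composite(frame: Image, camera: Image, camera_alpha: List[List[float]]) -> Image:
    """Background, then keyed live-action player, then virtual foreground."""
    layers = split_quadrants(frame)
    out: Image = []
    for y, bg_row in enumerate(layers["background"]):
        row = []
        for x, bg in enumerate(bg_row):
            # 1. Keyed camera footage of the player over the virtual background.
            px = over(camera[y][x], camera_alpha[y][x], bg)
            # 2. Virtual objects between camera and player go on top,
            #    using the grayscale alpha quadrant (red channel) as the mask.
            fg_alpha = layers["foreground_alpha"][y][x][0]
            px = over(layers["foreground"][y][x], fg_alpha, px)
            row.append(px)
        out.append(row)
    return out
```

The order matters: the player is sandwiched between the virtual background and the virtual foreground, which is what lets a controller, racket or ball pass in front of the player in the final shot.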

Using the third controller, roughly calibrated to my USB camera, I was able to film my very first mixed reality video.

It was very rough indeed. I had no green screen, so I had to key my subject (my girlfriend at the time) against a white wall, which is never a good idea, and I literally held the USB camera and controller together in my hand to keep them somewhat aligned. But I could see the potential, and I knew that with a proper green screen and camera rig, I could pull it off.
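Why a white wall makes a poor backdrop becomes clearer with a simple green-difference key, one common keying approach (not necessarily the one used for this video). The sketch below treats a pixel as background when its green channel exceeds both red and blue; the function name and the `gain` value are arbitrary assumptions for illustration.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # channels in 0.0-1.0


def green_difference_alpha(pixel: RGB, gain: float = 4.0) -> float:
    """Return an alpha from 0.0 (background) to 1.0 (subject) for one pixel."""
    r, g, b = pixel
    spill = g - max(r, b)  # how much greener this pixel is than red/blue
    # Strongly green pixels get alpha 0; everything else stays opaque.
    return min(1.0, max(0.0, 1.0 - gain * spill))
```

A saturated green backing gives a large positive difference and drops out, while skin tones stay fully opaque. A white wall has roughly equal red, green and blue, so this key sees no difference and keeps the wall as part of the subject. Keying on brightness instead would catch the wall, but would also tend to eat into highlights on skin and light clothing.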

Unfortunately, the producer who reached out to me was on a very tight schedule and had to go with a non-mixed reality approach.

Now that I had made a promising first step, my appetite for properly capturing mixed reality grew, and I started reaching out to colleagues who had access to green screen stages.

One of them took up the challenge and let me use a small green screen stage at Disney Interactive for my next attempt.

While I was testing mixed reality on various VR apps on that stage, my host brought up something he was hoping to achieve: capturing two or more VR users occupying and interacting in the same VR space.

This challenge inspired me to contact another colleague, a producer at the YouTube Space in LA, where I knew they had two green screen stages next door to each other.

My first goal was to create “co-presence,” meaning the two users would be physically separate but filmed from matching angles, so that when I combined the two camera feeds with the virtual environment, they’d look like they were sharing the same space.

I chose “Eleven: Table Tennis” as the VR application to test on because it had a multiplayer match mode and an inherently fixed play area that doesn’t let users “teleport” (change their position in space without physically walking). It was also very helpful to have live communication with the developer, as some last-minute code adjustments were required to get mixed reality to work properly in multiplayer mode.

Because both cameras had to be perfectly aligned for the illusion to work, I had to put both cameras on tripods and keep them static; any movement of one camera that wasn’t immediately matched by the other camera would break the illusion that they’re together in the same space.
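One way to check that “perfectly aligned” requirement is to compare the two tracked camera poses, each measured in its own room’s tracking space, and flag any drift. The sketch below does that with a straight-line position distance and the angle between two unit quaternions in (w, x, y, z) order; the function names and the 1 cm / 0.5° tolerances are hypothetical placeholders, not values from the shoot.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def pose_mismatch(pos_a: Vec3, rot_a: Quat, pos_b: Vec3, rot_b: Quat) -> Tuple[float, float]:
    """Return (distance in metres, rotation difference in degrees)."""
    distance = math.dist(pos_a, pos_b)
    # |q_a . q_b| handles the fact that q and -q encode the same rotation.
    dot = abs(sum(a * b for a, b in zip(rot_a, rot_b)))
    angle = math.degrees(2.0 * math.acos(min(1.0, dot)))
    return distance, angle


def cameras_aligned(pos_a: Vec3, rot_a: Quat, pos_b: Vec3, rot_b: Quat,
                    max_cm: float = 1.0, max_deg: float = 0.5) -> bool:
    """True if the two camera poses match within the (hypothetical) tolerances."""
    distance, angle = pose_mismatch(pos_a, rot_a, pos_b, rot_b)
    return distance * 100.0 <= max_cm and angle <= max_deg
```

With both cameras locked off on tripods, a check like this only has to pass once after setup; moving either camera would mean re-matching the other before the illusion holds again.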

Of course, being able to move the camera freely was part of the original allure of mixed reality for me. But the only way to live-sync the motion of two cameras in different spaces would be to use two robotic arms that mimic each other’s movements perfectly and simultaneously (and I didn’t have ready access to two robotic arms).

The only practical way I could move a camera freely while capturing a multiplayer VR session would be if both players shared the same physical space. This is definitely possible, and VR arcades such as The Void (which partnered with ILMxLAB) and IMAX VR do it successfully on a daily basis. But most VR applications didn’t offer a simple way to synchronize two separate VR headsets within the same space (since they assume home users have only one headset). Another limitation was the Vive’s play area, which tops out at around 15’ x 15’, so a game like “Eleven,” where the opponents are separated by a virtual table, wasn’t an ideal testbed.

But there was another VR game that I thought might be a perfect candidate for such a same-space multiplayer experiment: Racket:Nx, developed by longtime colleagues of mine at One Hamsa studio.

Racket:Nx is a sci-fi-enhanced racquetball simulator in which the opponents stand next to each other and take turns smashing a ball toward a 360-degree dome wall, with the goal of hitting targets, collecting power-ups and avoiding traps.

I had been keeping One Hamsa posted on my experiments (an earlier one even featured their game), and they became similarly excited about possibly capturing their multiplayer mode in mixed reality.

Coincidentally, one of the organizers of VRLA, an annual VR conference in LA (who came out to help with my co-presence experiment and even appears in the demo video herself), had kindly offered us last-minute booth space at the upcoming VRLA conference.

I felt VRLA would be the perfect venue for an interactive demonstration of a multiplayer virtual reality gaming experience using mixed reality, especially given my experience attending the conference the previous year.

Being a lifelong fan of gaming technology and equally enthusiastic about this incarnation of virtual reality, I was ready to have my mind blown by the exhibit floor attractions. Instead, when I got there, I witnessed long, stagnant lines leading to small dark rooms where people stood, one at a time, with headsets on. Rarely did I get a glimpse of a monitor showing what they were seeing, and it was very difficult to gauge whether they enjoyed the experience. I figured I’d wait for someone to exit a booth and ask them if it was worth it, but after waiting for a few minutes next to one of the booths, I gave up and moved on to things that didn’t require waiting in a long line.

I was disappointed, not so much for my own sake, but because I knew this kind of first impression could be destructive to the VR community, especially at such an early stage of market penetration. I felt there was an urgent need to show the VR community better ways to “tell the story of VR,” especially the largely overlooked (yet very promising) multiplayer VR experience.

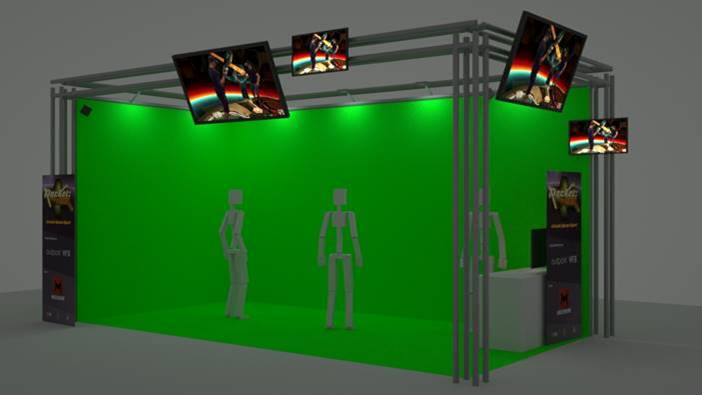

By coordinating between One Hamsa and VRLA, I set out to create a first-of-its-kind multiplayer VR mixed reality demonstration booth. The road was filled with organizational and technical challenges, and it required financing from Keshet International, technical and labor support from Machinima Studios, as well as the supportive crews of VRLA and Blaine Conference Services.

But throughout the preparation, and despite logistical and financial limitations, I fought to ensure our booth would check three basic boxes:

We made it fun and exciting by:

We made the mixed reality feed prominent by playing it on two big LCD screens mounted on a 12-foot truss right above the play area, facing the two main pathways leading to our booth.

And we made the mixed reality work, with the camera moving and multiplayer mode active, by tackling a variety of technical obstacles one at a time, not only before but even during the two-day event itself, with the programming crew preparing a fresh update between the first and second days (luckily, they were in a time zone 10 hours away, so our night was their day).

When the doors opened at the 2017 VRLA expo, our booth was standing tall, ready to welcome thousands of attendees. Our position at the very front of the showroom, next to the main entrance, meant that our success or failure would be on full display.

Luckily, it was a success.

Within minutes, a crowd formed in front of our booth, made up not only of people eager to play the game but also of bystanders who weren’t in line and simply watched the mixed reality feed on the TV screens above. In fact, the crowd of spectators and the line to play were so big that the organizers asked us to set up stanchions to keep the line from blocking the entrance to the hallway. That was definitely a nice problem to have, and a good sign that we were doing something right. During the event, I went around and shot some crowd reactions, as well as behind-the-scenes timelapses of the booth being built, which we ended up editing together with mixed reality gameplay captured in the booth into a short sizzle clip.

The programmers of Racket:Nx really went out of their way and coded a special “MR” build of the game for the VRLA exhibit, constantly adding features that improved the quality and ease of capturing the game in mixed reality. Here are only some of the technical issues they faced:

On the exhibition floor, our booth was an instant hit. Fellow presenters and VR professionals reacted very positively to the activation and its effectiveness in showcasing the multiplayer experience. However, as inspired and impressed as they were, many found it difficult to see how to apply this approach to promoting their own VR experiences.

This might have been related to the fact that there weren’t many sport-centric VR titles presenting at VRLA (maybe the LA crowd is more narrative-oriented, or the VR industry as a whole is trying to distance itself from the video game market and its dominant players).

Of the few who did inquire about using mixed reality to promote their titles, even fewer could afford a one-day mixed reality production session, let alone a multi-day exhibit floor booth.

While writing this post, I reached out to Kert Gartner, whose early mixed reality trailers had inspired me to get into the field, and asked whether he had done any new mixed reality work. His answer:

“I haven’t created any new Mixed Reality trailers since they’re such a pain to set up, and I don’t think they’re necessarily the best way to showcase a VR game. I’ve been focusing more on avatar based trailers, which are much simpler to produce and in a lot of cases, produce a much nicer result!”

I share Kert’s experience of mixed reality being a pain to set up. However, VR developers are already creating new tools that standardize mixed reality on both the developers’ and users’ ends. While I was researching this blog post, Matan Halberstadt, one of Racket:Nx’s developers, referred me to LIV, a VR tool that “everyone is using today for streaming mixed reality. It's much simpler to setup and it replaces OBS by compositing the 4 quadrants”.

Is part of the allure of some virtual reality experiences the fact that we get to embody virtual avatars who look nothing like us? If so, does mixing a real image of ourselves into the virtual reality experience undermine what we’re trying to sell? That may be true in some cases, but definitely not all, especially when it comes to VR e-sports like Racket:Nx.

Perhaps being able to see users inside the virtual environment doesn’t cut it as long as we can’t see their facial expressions? After all, there’s something alienating about not being able to see a person’s eyes. Google Machine Perception researchers, in collaboration with Daydream Labs and YouTube Spaces, have been working on a solution to this problem that reveals the user’s face by virtually “removing” the headset, creating a realistic see-through effect.

No doubt this feature will be available to mixed reality creators sometime in the near future. Will it open the floodgates for mixed reality content?

The topic of virtual reality’s sluggish market growth fuels hot debates and keeps most VR developers up at night. At least as of late 2018, virtual reality is still struggling to build a user base large enough to justify significant investment in content.

From the very beginning (of this incarnation of VR), market observers pointed out a chicken-and-egg paradox: users were assumed to be waiting for more VR content, and VR developers were assumed to be waiting for more users. However, over the past couple of years, the VR marketplace has seen constant growth in content, including A-list titles like Fallout 4 getting VR versions, and yet the general population, including gamers, still doesn’t seem all that motivated to bring desktop VR headsets into their homes.

I mentioned at the top of the article that most people know VR as something that involves wearing a headset and experiencing something other than reality. Perhaps being able to look down at our smartphones, interact with friends who came to visit, or simply look after our kids, is more important to most of us than being fully immersed in entertainment and blocked from our real surroundings. Maybe we’re still waiting for that game-changing VR tool that will tip the scales and convince people that the rewards justify the sacrifices.

Maybe it’s simply that we haven’t figured out how to properly market VR? One of the challenges in introducing VR to the world is that unlike a new film, which can be previewed on any TV screen, tablet or smartphone, there is no way to convey the essence of a virtual reality experience without being in VR and getting a taste for it.

This is another chicken-and-egg paradox, one that has led some developers to focus on VR arcades for the time being. Meanwhile, headset manufacturers seem to acknowledge the importance of mixed reality composites in their promotional materials.

Magic Leap, which recently revealed its long-anticipated augmented reality (AR) headset, is betting its entire existence on the idea that users prefer their virtual content to coexist with their surrounding reality. Not surprisingly, the final sequence of the trailer for its demo experience “Dr. Grordbort's Invaders” mixes real and virtual elements to illustrate the user experience.

I found it strange that the trailer chose to point out that it’s a single-player experience, by showing the player’s friends mocking him as he fights his “imaginary enemies” which they can’t see.

Even worse, the player is depicted as oblivious to his friends’ mockery, thus selling short the key advantage AR has over VR: maintaining users’ connection to the physical world and their friends. Being able to see each other and experience VR together (perhaps competing against each other in the living room) is easily the single feature many current VR holdouts are waiting for. Taking a well-known Achilles’ heel of desktop VR and suggesting that it persists in AR as well (where in fact it technically doesn’t) is nothing short of self-sabotage.

Still, as with other innovative tech products in the past, the technology will mature and someone will inevitably crack the formula and achieve mass adoption. There’s little doubt that whichever VR platform ultimately takes off, mixed reality will play a big role in transforming it from a niche gadget into a much-desired entertainment product.

Until that happens, mixed reality will continue to evolve as a “virtual cinematography” tool for filmmakers and an interactivity tool for video artists, who are sure to inspire us with ever more creative uses of this widely accessible and incredibly powerful technology.